Take a small number and keep hitting it with the function \(f(x) = 2.8x(1-x)\). It converges to a "fixed point", meaning a number \(x\) where \(x = f(x)\).

So, the red line in the above "cobweb plot" converges to where the line \(y = x\) and parabola \(y = 2.8x(1-x)\) cross.

But what if we replace 2.8 by some bigger number?

Take a small number. Keep hitting it with the function \(f(x) = rx(1-x)\).

When \(1 \lt r \lt 2\) it'll keep increasing and quickly converge to a fixed point, where \(x = f(x)\).

When \(2 \lt r \lt 3\) it'll oscillate but still converge to the fixed point.

When \(r \gt 3.56994672\dots\) you usually get chaos!

For \(3 \lt r \lt 3.56994672\dots\) interesting things happen. The period of the oscillations keep doubling! You can see that at left here.

The period doubles at \(r = 3.44949\dots\), and again at \(r = 3.54409\dots\), and so on.

This is called "period doubling to chaos".

You can see the period doubling to chaos here: for each value of \(r\), the "attractor" for \(f(x) = rx(1-x)\) is shown as a bunch of points above that \(r\). For low \(r\) there's just one: the fixed point. Then there are 2, then 4, and so on. At \(r = 3.56994672\dots\), chaos breaks out! But it's full of patterns.

Zooming in on the chaotic region \(r \gt 3.56994672\dots\), we see beautiful things. There are regions of stability that end in their own tiny copies of the "period doubling to chaos" picture! For example there are three at \(r = 3.82843\dots\).

You could spend your life on this. Fortunately other people already, so we can just read what they found! You can start here:

It has lots of references to books and papers of various levels of difficulty. When you get really serious, try this amazing book:

All the images in this diary entry are from Wikicommons. Click on

them to see who created them.

March 20, 2019

Poke a Koch-snowflake-shaped hole in a Koch snowflake and you get... six more Koch snowflakes! You just can't kill it!

I got this from Derek Wise's blog, where he explains how to draw fractals using monads in Haskell:

Nidhal Selmi drew it. It's a blend of the Sierpinski triangle, a famous fractal, and the Penrose triangle, a famous 'impossible object'.

Let me explain how it's connected to sheaf cohomology.

First, the Penrose triangle! Penrose has pointed out that this is 'locally possible': there's nothing wrong with any small piece. But if you follow it all the way around, you can't consistently interpret it.

This is precisely the sort of thing that sheaf cohomology can detect.

Second, the Sierpinski triangle: a triangle with triangular holes poked out of it, in an iterated way.

While the Penrose triangle only has one hole, this has a countable infinity of them... each giving the opportunity for an impossible twist!

Now, roughly speaking, a 'sheaf' on a topological space \(X\) assigns a set of 'sections' \(F(U)\) to any open subset \(U\) of that space, with the ability to restrict sections to smaller subsets, and to glue sections that agree on overlaps to get sections on bigger subsets! Here's the precise definition:

Sheaves let us study local versus global issues.

The 'first cohomology' of a sheaf describes the set of things that locally look like sections but perhaps do not globally come from sections.

In this paper, Penrose showed that his triangle gives a nontrivial element of first cohomology:

So, you should be able to work out the sheaf cohomology of the Sierpinski triangle and show Nidhal Selmi's impossible object gives an interesting element of the first cohomology!

I would do this here, but I need to get some work done. If If you want to learn more about sheaves and impossible objects, besides Penrose's article I recommend this:

Here's a nice animation by Tom Ruen:

The extremely rainy winter has produced a 'superbloom' in many parts of Southern California, and the hills near us are full of purple flowers. These are Campanula medium, known as 'Canterbury bells' for their bell-like flowers:

But there are many other flowers in the hills. One of the most common, toughest plants in these dry areas is the brittlebush, Encelia farinosa. Its gray-green leaves remain green when all the grass has dried up and turned brown. But now it's blooming magnificently:

The California poppy, Eschscholzia californica, is running rampant in many places. Here you can find small patches among other flowers:

There are other even subtler flowers, like the spotted hideseed, Eucrypta chrysanthemifolia:

March 26, 2019

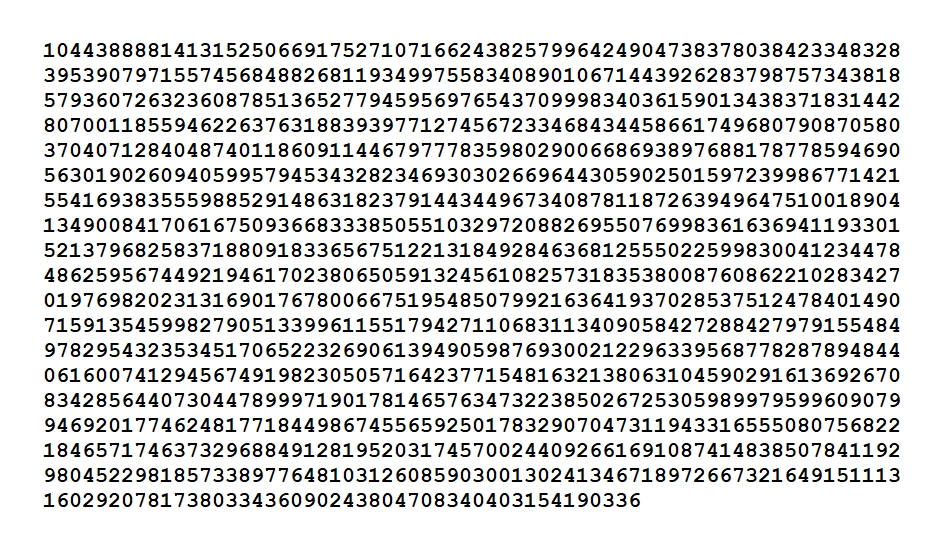

Wow! A new paper claims to have found a more efficient algorithm for

multiplying large numbers. It supposedly runs in \(O(n \log n)\) time

— this had never been achieved before. One catch: it only works on

numbers with at least this many digits:

And I don't mean as many digits as this number has. I mean this number of digits.

Yes: their algorithm only works for numbers that have at least \(2^{4096}\) digits. If you're multiplying numbers with fewer digits, they suggest using another method.

The paper is here:

In 2007 there was another big step, Fürer's algorithm. By 2015 Harvey, van der Hoeven and Lecerf used a new algorithm to whittle the time down to \(O(n \log(n) 2^{3 \mathrm{lg}^*(n)})\), where \(\mathrm{lg}^*(n)\), the iterated logarithm, is a function that grows so slowly all the stars will burn out long before it reaches 6. It's basically the inverse of tetration:

In 2018, Harvey and van der Hoeven replaced the "3" with a "2" and knocked the time down to \(O(n \log(n) 2^{2 \mathrm{lg}^*(n)})\). So it would be really great if they've now succeeded in getting \(O(n \log(n))\).

Here are two consequences of the new result, if it's true:

We're really bad at proving lower bounds on computational complexity. We may be missing fundamental ideas. Or maybe Gödel's theorem kicks in, and such results are simply unprovable from the usual axioms of mathematics.